I want my students to know why and how the theories, tools and practices they will later use on a daily basis were conceived and spread; a good 80% of them will take part in a policy evaluation within the next 10 years. This need also derives from my research program, which aims at understanding the transformation of applied economics between 1965 and 1985. Policy analysis is a large part of what economists mean by “applying economics.” It is the area of expertise most emphasized in the ongoing advertising campaign designed to reemphasize economists’ contribution to society. Understanding the applied turn thus requires examining to what extent policy shaped the kinds of tools and knowledge economists produced, but I have trouble reconciling the various histories I have read so far.

On the one hand, there are the “backward” histories in which economists reconstruct the origins of their evaluation tools and practices. Economic evaluation is now a clearly delineated field, as exemplified by the standardization of course syllabi: it covers structural econometrics, microsimulation, controlled experiments and natural experiments, with raging methodological controversies between proponents of “structural” estimation techniques and advocates of “reduced-form” approaches. Each side has told its own story. James Heckman (in 1991, 2007, and 2010) traces the origins of the structural approach to the Cowles Commission econometricians’ belief that social knowledge was advanced enough to allow the estimation of basic behavioral relationships, which could then be used to forecast the impact of policies that had not yet been implemented.

This method was then dramatically criticized and amended by Robert Lucas, who famously pointed out that the parameters the Cowlesmen estimated depended on agents’ expectations, which in turn varied with shifts in policy rules. Comparing the effects of alternative policies therefore required estimating the policy-invariant “deep parameters” found in strictly microfounded models (tastes and technology, for instance). This requirement, however, significantly complicated identification and made results very sensitive to model specification. As many economists, among them David Hendry and Edward Leamer, voiced their concern with structural econometrics, an alternative set of techniques, successfully implemented during the War on Poverty, was spreading. The development of “randomized experiments” is usually traced to Ronald Fisher and Jerzy Neyman’s analysis of the determinants of agricultural productivity in the 1920s. In the wake of the controversial Coleman report in 1966, MIT graduate student Heather Ross proposed to conduct a randomized experiment to evaluate the impact of a negative income tax. The resulting New Jersey Income Maintenance Experiment was widely publicized by scientists and public officials alike, and the design was subsequently applied to evaluate other programs, including housing allowances. Randomized experiments were even made compulsory for evaluating training programs under the 1988 Family Support Act (see details in Levitt and List, Manski and Garfinkel, or Haveman). Although many voices soon pointed out the ethical and methodological limits of randomized controlled trials (external validity and general equilibrium effects, among others), a third wave of experiments swept economics from the 1990s onward, with, as Steven Levitt and John List put it, greater empirical and theoretical ambitions. J-PAL is often mentioned as its flagship, with applications ranging from development to labor and poverty issues.

On the other hand, historians have fashioned “forward” histories of public expertise, emphasizing how Cost-Benefit Analysis (CBA) developed in the wake of the Flood Control Act of 1936, became part of the larger process RAND scholars designed for the Department of Defense during the Cold War, and spread after Lyndon B. Johnson decided that the Great Society, in particular the many War on Poverty programs, required a generalization of the Planning-Programming-Budgeting System (PPBS) (see for instance Porter, or Jardini). This vast and multidisciplinary literature examines the broad area of “policy science” or applied “policy expertise,” in which policy design and administration are often emphasized to the detriment of evaluation. It analyses the antagonism between top-down (PPBS) and bottom-up (Community Action Programs) approaches to policy-making, documents the rise of an independent “policy science” with its own graduate programs and institutes, and ties the transformation of policy expertise to shifts in governmental regimes, from the Cold War defense establishment to Johnson’s poverty activism and the subsequent rise of a neoliberal approach to the state. Some historical pieces also outline the broader trends underlying those narratives. In his forthcoming book, for instance, Will Thomas argues that scientists believed their science’s association with technology and rationality made it de facto policy-relevant. Yet while some scholars (and historians) point to scientists’ recurring difficulty in catching policy-makers’ attention and getting their ideas implemented, others claim that postwar science has been an instrument of political power, as evidenced by the Cold War and Great Society rationales, or the rise of “modernization theory.” Marion Fourcade documents the “economicization of social policy,” pointing to the integration of micro tools and concepts (efficiency, incentives, opportunity, tradeoffs) into policy-makers’ discourse.
She also investigates the differences between national policy traditions. She contrasts the American experimental orientation and its academic-based expertise with the British setting, in which expertise is located in a network allowing for moves between academia, government bodies and think-tanks, and in which the focus on efficiency is blended with a distributional concern (see also Pollitts). Her picture of France is one of fragmented and conflicting styles of public expertise: the economist-engineers who invented cost-benefit analysis in the nineteenth century, the “ENArchie” trained in the legal and administrative tradition, and those academic scholars who tie policy analysis to a sociological deconstruction of institutions. These various strands are nevertheless similar in that policy analysis has traditionally been conducted by state-related experts for state management purposes.

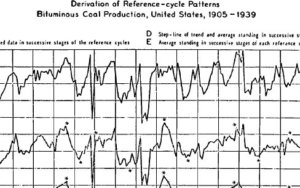

An example of PPBS, around 1971.

Now, my difficulty lies in matching the supply-side stories of economists with the demand-side narratives of historians. Here are the gaps I would like to see filled.

- What were the relationships between economists, policy officials and professionals throughout this period? Beryl Radin argued that the history of policy analysis is pervaded by a conflict between the “world of analysis” and the “world of politics.” Does this apply to evaluation? Does it apply to economists? A related question is how economists’ perception of their relations with policy-makers shaped their practices. In an illuminating account of the application of cost-benefit techniques to water resources management during the 1960s, Spencer Banzhaf ties methodological conflicts to alternative visions of economic expertise. Those economists who emphasized the primacy of consumer sovereignty thought it legitimate to collapse individuals’ valuations of the costs and benefits accruing from a policy into a single function and, on that basis, to give advice to policy-makers. Other scholars, including Robert Dorfman and Stephen Marglin, believed that the electoral process had entrusted state representatives with some kind of political sovereignty. Weighting the various objectives of water policy (flood prevention, transportation, irrigation, etc.) against one another in the objective function was not economists’ job, they argued, and multi-objective CBA should be the rule. In his recent history of the Value of Statistical Life, Banzhaf similarly explains that economists shaped different evaluation tools depending on whether they thought the Department of Defense or USAF pilots themselves were the best judges of the value of those pilots’ lives.

- What was economists’ influence on policy-makers’ choice of evaluation procedures? And reciprocally, did policy-makers weigh in at all on the structural vs. reduced-form debates?

- What happened to Cost-Benefit Analysis? Historians explain that Johnson’s War on Poverty fostered both CBA and experimental evaluation techniques. Economists’ accounts, by contrast, revolve around the debate between randomized experiments and structural econometrics. CBA is altogether absent from their historical narratives, and it is increasingly taught in separate courses (such as Stanford’s policy analysis course). Lecturers explain that these techniques serve distinct objectives and should therefore be treated separately. Is the separate life of CBA a consequence of economists’ obsession with causality issues? Was there a transformation in their approach to evaluation (efficiency vs. causality)? Or are CBA and RCTs used by distinct communities (economists vs. policy administrators? environmental vs. health specialists?)?

- All in all, is there an overarching story of “economic policy evaluation,” or are differences across fields significant? I have just mentioned the case of environmental economics, but it seems that in the US, the UK and France alike, education and health policy experts have a separate (and much older) culture of evaluation. How do these methodological debates play out in the highly publicized field of development economics? What about transportation, urban economics, and so forth? Finally, is the internationalization of economic techniques gradually erasing national evaluation traditions? If not, how are these tools incorporated into dissimilar institutional settings?