The idea that economics has recently gone through an empirical turn, that it went from theory to data, is all over the place. Economists have been trumpeting this transformation on their blogs in the last few years, and only rarely qualifying it. It is now showing up in economists’ publications, not only in articles by those who claim to have been the architects of a “credibility revolution,” but in others’ as well. This narrative is also finding its way into historical works, for instance Avner Offer and Gabriel Söderberg’s new book on the history of the Nobel Prize in economics.

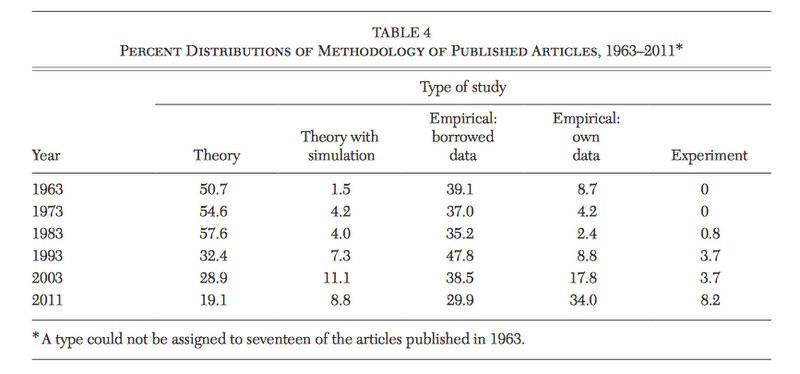

The structure of the argument is often the same. The chart below, taken from a paper by Dan Hamermesh, is used to argue that there has been a growth in empirical research and a decline of theory in economics in the past decades.

A short explanation is then offered: this transformation has been enabled by computerization, plus new, more abundant and better data. On this basis, the “death of theory,” or at least a “paradigm shift,” is announced. The causal story is straightforward, maybe a little too straightforward. My goal here is not to deny that a sea change has been taking place in the way economists produce and handle data. It is to argue that the transformation has been oversimplified and mischaracterized.

What is this transformation we are talking about? An applied rather than an empirical turn

What does Hamermesh’s figure show exactly? Not that there is more empirical work in economics today than 30 or 50 years ago. It indicates that there is more empirical work in top-5 journals, that is, that empirical work has become more prestigious. Of course, the quantity of empirical work has probably boomed as well, if only because the time needed to input data and run a regression has shrunk from a week in the 1950s to 8 hours in the 1970s and to the blink of an eye today. But we need a more precise measure of the scope of the increase, and we need to identify the key period(s) in which the trend accelerated. This requires supplementing the bibliometric work done on databases like EconLit, WoS or JSTOR with hand-collected data on other types of economic writing. For economics has in fact nurtured a strong tradition of empirical work since at least the beginning of the twentieth century, much of it published outside the major academic journals. Whole fields, like agricultural economics, business cycles (remember Mitchell?), labor economics, national accounting, then input-output analysis from the 1930s to the 1960s, cost-benefit policy evaluation from the 1960s onward, and finance right up to the present, have been built not only upon new tools and theories, but also upon large projects aimed at gathering, recording and making sense of data. Yet many of these databases and the associated research were either proprietary (when funded by finance firms, commercial businesses, insurance companies, the military or government agencies), or published in reports, books and outlets such as central bank journals and the Brookings Papers.

Neither does Hamermesh’s chart show that theory is dying. Making such a case requires tracing the growth in the number of empirical papers in which a theoretical framework is altogether absent. Hamermesh and Jeff Biddle have recently done so, and they highlight an interesting trend. In the 1970s, all microeconomic empirical papers exhibited a theoretical framework, but the 2000s have seen a limited yet significant resurgence of atheoretical work. That resurgence, however, has not yet matched the proportion of atheoretical papers published in the 1950s. If an emancipation of empirical work is under way, economics is not there yet. All in all, it seems that 1) theory dominating empirical work has been the exception rather than the rule in the history of economics; 2) there is currently a re-equilibration of theoretical and empirical work, but not yet an emancipation of the latter; and 3) what is dying, rather, is the purely theoretical paper. This characterization is closer to how Dani Rodrik describes the transformation of the discipline in Economics Rules:

“these days, it is virtually impossible to publish in top journals [in some fields] without including some serious empirical analysis […] The standards of the profession now require much greater attention to the quality of data, to causal inference from evidence and a variety of statistical pitfalls. All in all, this empirical turn has been good for the profession.”

The death of theory-only papers was, in fact, pronounced decades ago, when John Pencavel revised the JEL codes in 1988 and decided to remove the “theory” category. He replaced it with micro, macro and tools categories, explaining that “good research in economics is a blend of theory and empirical work.” And indeed, another term that has gained wide currency in the past decades is “applied theory.” It denotes theoretical models conceived in relation to specific issues (public, labor, urban, environmental), and sometimes in relation to specific empirical validation techniques. It seems, thus, that economics has not really gone “from theory to data,” but has rather experienced a profound redefinition of the relationship between theoretical and empirical work. And there is yet another aspect to this transformation. Back in the 1980s, JEL classifiers did not merely merge theory with empirical categories. They also added policy work, on the assumption that most of what economists produced was ultimately policy-oriented. This is why the transformation of economics in the last decades is better characterized as an “applied turn” than as an “empirical turn.”

“Applied” has indeed become a recurring word in John Bates Clark citations. “Roland Fryer is an influential applied microeconomist,” the 2015 citation begins. Matt Gentzkow (2014) is a leader “in the new generation of microeconomists applying economic methods.” Raj Chetty (2013) is “arguably the best applied microeconomist of his generation.” Amy Finkelstein (2012) is “one of the most accomplished applied microeconomists of her generation.” And so forth. The citations of all laureates since Susan Athey (“an applied theorist”) have made extensive use of the “applied” wording, and have made clear that the medal was awarded not merely for “empirical” work, new identification strategies or the like, but for path-breaking combinations of new theoretical insights with new empirical methodologies, aimed at shedding light on policy issues. Even the 2016 citation for Yuliy Sannikov, the most theoretical medal in a long time, emphasizes that his work “had substantial impact on applied theory.”

Is this transformation really new?

The timing of the transformation is difficult to pin down. Some writers argue that the empirical turn began after the war; others see a watershed in the early 1980s, with the introduction of the PC. Others still point to the mid-1990s, with the rise of quasi-experimental techniques and the seeds of the “credibility revolution,” or to the 2010s, with the boom in administrative and real-time microeconomic business data, the interbreeding of econometrics with machine learning, and economists deserting academia to work at Google, Amazon and other IT firms.

Dating when economics became an applied science might be a meaningless task. The pre-war period was characterized by the absence of a pecking order between theory and applied work. The rise of a theoretical “core” in economics between the 1940s and the 1970s is undeniable, but it met fierce resistance within the profession. Well-known artifacts of this tension include the Measurement without Theory controversy and Oskar Morgenstern’s attempt to make empirical work a compulsory criterion for nomination as a fellow of the Econometric Society. And the development of new empirical techniques in micro (panel data, lab experiments, field experiments) has been slow yet constant.

Again, a useful proxy for tracking the transformation of the discipline’s prestige hierarchy is the John Bates Clark medal, as it signals what economists see as the most promising research agenda at a given time. The first seven John Bates Clark medals were perceived as so theoretically oriented that part of the profession requested the establishment of a second medal, named after Mitchell, to reward empirical, field and policy-oriented work. Roger Backhouse and I have shown that such contributions were increasingly singled out from the mid-1960s onward. Citations for Zvi Griliches (1965), Marc Nerlove (1969), Dale Jorgenson (1971), Franklin Fisher (1973) and Martin Feldstein (1977) all emphasized contributions to empirical economics, and reinterpreted their work as “applied.” Feldstein, Fisher and, later, Jerry Hausman (1985) were each described as an “applied econometrician.” It was the mid-1990s medals (Lawrence Summers, David Card, Kevin Murphy) that emphasized empirical work more clearly. Summers’ citation notes a “remarkable resurgence of empirical economics over the past decade [which has] restored the primacy of actual economies over abstract models in much of economic thinking.” And as mentioned already, the last eight medals systematically emphasize efforts to weave together new theoretical insights, empirical techniques and policy thinking.

All in all, not only is “applied” a more appropriate label than “empirical,” but “turn” may be too strong a term for a transformation that seems to be made up of overlapping stages of varying intensity and character. But what caused this re-equilibration, and possible emancipation, of applied work in the last decades? As befits economic stories, there are supply and demand factors.

Supply-side explanations: new techniques, new data, computerization

A first explanation for the applied turn in economics is the rise of new and diverse techniques for confronting models with data. Amid a serious crisis of confidence, the new macroeconometric techniques developed in the 1970s by Sims, Kydland, Prescott, Sargent and others (VARs, Bayesian estimation, calibration) spread alongside the new models they were meant to estimate. The development of laboratory experiments contributed to redefining the relationship between theory and data in microeconomics. As Svorenčík puts it (p. 15):

“By creating data that were specifically produced to satisfy conditions set by theory in controlled environments that were capable of being reproduced and repeated, [experimentalists] sought […] to turn experimental data into a trustworthy partner of economic theory. This was in no sense a surrender of data to the needs of theory. The goal was to elevate data from their denigrated position, acquired in postwar economics, and put them on the same footing as theory.”

The rise of quasi-experimental techniques, including natural experiments and randomized controlled experiments, was also aimed at achieving a re-equilibration with (some would say an emancipation from) theory. Whether it actually enabled economists to reclaim inductive methods is fiercely debated. Other techniques blurred the demarcation between theory and applied work by constructing real-world economic objects rather than studying them. This was the case with mechanism design. Blurred frontiers also resulted from the growing reliance upon simulations such as agent-based modeling, in which algorithms stand for theory, application, both or neither.

A second, related explanation is the “data revolution.” Though the recent explosion of real-time, large-scale, multi-variable digital databases is mind-boggling and has the allure of a revolution, the availability of economic data has evolved constantly since the Second World War. There is a large literature on the making of public statistics, such as national accounts or the cost-of-living indexes produced by the BLS, and new microeconomic surveys were started in the 1960s (the Panel Study of Income Dynamics) and the 1970s (the National Longitudinal Survey). Additionally, administrative databases were increasingly opened up for research. The availability of tax data, for instance, transformed public economics. In his 1964 AEA presidential address, George Stigler thus claimed:

The age of quantification is now full upon us. We are armed with a bulging arsenal of techniques of quantitative analysis, and of a power - as compared to untrained common sense - comparable to the displacement of archers by cannon […] The desire to measure economic phenomena is now in the ascendent […] It is a scientific revolution of the very first magnitude.

A decade later, technological advances allowed a redefinition of the information architecture of financial markets: asset prices, as well as a range of business data (credit card information, etc.), could be recorded in real time. The development of digital markets eventually generated new large databases on a wide range of microeconomic variables.

Rather than a revolution in the 1980s, 1990s or 2010s, the history of economics therefore appears to be one of constant adjustment to new types of data. The historical record belies Liran Einav and Jonathan Levin’s statement that “even 15 or 20 years ago, interesting and unstudied data sets were a scarce resource.” In a 1970 book on the state of economics edited by Nancy Ruggles, Dale Jorgenson explained that

“the database for econometric research is expanding much more rapidly than econometric research itself. National accounts and interindustry transactions data are now available for a large number of countries. Survey data on all aspects of economic behavior are gradually becoming incorporated into regular economic reporting. Censuses of economic activity are becoming more frequent and more detailed. Financial data related to securities market are increasing in reliability, scope and availability.”

And Guy Orcutt, the architect of economic simulation, explained that the problem of the day was that “the enormous body of data to work with” was “inappropriate” for scientific use because economists did not control data collection. In a qualitatively and quantitatively very different situation, they were making the same statements and issuing the same complaints as economists do today.

This dramatic improvement in data collection and storage was enabled by improvements in computer technology. Usually seen as the single most important factor behind the applied turn, the computer has affected much more than economic data. It has enabled the implementation of validation techniques economists could only have dreamed of in previous decades. But as with economic data, the end of history has been repeatedly pronounced: in the 1940s, Wassily Leontief predicted that the ENIAC could soon tell how much public work was needed to cure a depression. In the 1960s, econometrician Daniel Suit wrote that the IBM 1920 enabled the estimation of models of “indefinite size.” In the 1970s, two RAND researchers explained that computers had provided a “bridge” between “formal theory” and “databases.” And in the late 1980s, Jerome Friedman claimed that statisticians could substitute computer power for unverifiable assumptions. If a revolution is under way, then, it is the fifth in fifty years.

But the problem is not only one of replacing “revolutions” with more continuous processes. The computer argument seems more deeply flawed. First, the two most computer-intensive techniques of the 1970s, Computable General Equilibrium and large-scale Keynesian macroeconometrics, were marginalized at the very moment they were finally getting the equipment needed to run their models quickly and with fewer restrictions. Fights erupted over the proper way to solve CGE models (estimation or calibration), Marianne Johnson and Charles Ballard explain, and the models were seen as esoteric “black boxes.” Macroeconometric models were swept out of academia by the Lucas critique and found refuge in the forecasting business. And what has become the most fashionable approach to empirical work three decades later, Randomized Controlled Trials, merely requires the kind of mean and variance calculations that could have been performed on 1970s machines. Better computers are therefore neither sufficient nor necessary for an empirical approach to become dominant.

Also flawed is the idea that the computer would stimulate empirical work and thus weaken theory. In physics, evolutionary biology or linguistics, computers transformed theory as well as empirical work. This did not happen in economics, in spite of attempts to disseminate automated theorem proving, numerical methods and simulations. One explanation is that economists stuck with concepts of proof that required results to be analytically derived rather than approximated. With changes in epistemology, the computer could even have made any demarcation between theory and applied work irrelevant. Finally, while hardware improvements are largely exogenous to the discipline, software development is endogenous. The way computing affected economists’ practices therefore depended on their scientific strategies.

The better integration of theoretical and empirical work oriented toward policy prescription and evaluation, which characterized the “applied turn,” was not merely driven by debates internal to the profession, however. It also came largely in response to the new demands and pressures that public and private clients were placing on economists.

Demand-side explanations: new patrons, new policy regimes, new business demands

An easy explanation for the rise of applied economics is the troubled context of the 1970s. Social agitation, the urban crisis, the ghettos, pollution, congestion, stagflation, the energy crisis, the looming environmental crisis and the Civil Rights movement resulted in the rise of radicalism and neoconservatism alike, and in student protests demanding more relevance in their scientific education. Both economic education and research were brought to bear on real-world issues. But this raises the same “why is this time different?” kind of objection mentioned earlier. What about the Great Depression, World War II and the Cold War? These eras were pervaded by a similar sense of emergency and a similar demand for relevance. What was different, then, was the way economists’ patrons and clients conceived the appropriate cures for social ills.

Patrons of economic research have changed. In the 1940s and 1950s, the military and the Ford Foundation were the largest patrons. Both wanted applied research of the quantitative, formalized and interdisciplinary kind. Economists, however, were eager to secure distinct streams of money. They felt that being often lumped together with other social sciences was a problem. Suspicion toward the social sciences was as high among politicians (isn’t there a systematic affinity with socialism?) as among natural scientists (the social sciences can’t produce laws). Accordingly, when the NSF was established in 1950, it had no social science division. By the 1980s, however, it had become a key player in the funding of economic research. As it rose to dominance, the NSF imposed policy benefits, later christened “broader impact,” as a criterion for selecting research projects. This orientation was embodied in the Research Applied to National Needs office, which, in the wake of its creation in the 1970s, supported research on social indicators, data and evaluation methods for welfare programs. The requirement that applications emphasize the social benefits of economic research was reinforced by Ronald Reagan’s threat in 1981 to slash the NSF budget for social sciences by 75%, and the tension between pure research, applied research and policy benefits has since remained a permanent feature of its economics program.

As exemplified by the change in the NSF’s strategy, science patrons’ demands have sometimes been subordinated to changes in policy regimes. From Lyndon Johnson’s to Reagan’s, all administrations pressured scientists to produce applied knowledge to help design and, this was new, evaluate economic policies. The policy orientation of the applied turn was already apparent in Stigler’s 1964 AEA presidential address, characteristically titled The Economist and the State: “our expanding theoretical and empirical studies will inevitably and irresistibly enter into the subject of public policy, and we shall develop a body of knowledge essential to intelligent policy formulation,” he wrote. Henry Ford’s interest in having the efficiency of some welfare policies and regulations evaluated fostered the generalization of cost-benefit analysis. It also pushed Heather Ross, then an MIT graduate student, to undertake, with Princeton’s William Baumol and Albert Rees, a large randomized social experiment to test a negative income tax in New Jersey and Pennsylvania. The motivation for undertaking experiments throughout the 1970s and 1980s was not so much to emulate medical science as to allow policies to be evaluated on pilot projects. As described by Elizabeth Berman, Reagan’s deregulatory movement furthered this “economicization” of public policy. The quest to emulate markets created a favorable reception for the mix of mechanism design and lab experiments economists had developed in the late 1970s. Some of its achievements, such as the FCC auction or the kidney-matching algorithm, have become the flagships of a science capable of yielding better living. These skills have been equally in demand by private firms, especially as the rise of digital markets involved the design of pricing mechanisms and the study of regulatory issues.

Because it is as much the product of external pressures as of epistemological and technical evolutions, it is not always easy to disentangle rhetoric from genuine historical trends in the “applied turn.”

Note: this post relies on the research Roger Backhouse and I have been carrying out over the past three years. It is an attempt to unpack my interpretation of the data we have accumulated, as we are writing up a summary of our findings. Though it is heavily influenced by Roger’s thinking, it reflects only my own views.