
While economists have used engineering primarily as a metaphor to describe their practices (formalization, modeling, market design, problem solving, policy expertise), historians have documented the substantial impact that engineering, as a science, a practice or a culture, has had on the formation of economics since the 19th century. For instance, they have studied how engineering was instrumental in shaping the “New Economics” that economists built at MIT between the 1940s and the 1960s. At a workshop on the history of macroeconomic dynamics organized last summer by fellow blogger Pedro Duarte, Judy Klein and Esther-Mirjam Sent presented new evidence that New Classical macroeconomic modeling was heavily influenced by the Carnegie engineering mindset (a video of their talks was posted online). In particular, they showed how Lucas, Prescott and their colleagues built upon the Cold War “modeling strategies” previously devised by economists and engineers under military supervision.

Klein’s take on the rise of New Classical economics is especially interesting. She aims to explain how the demands of military patrons during the Cold War turned some branches of mathematics into a “science of economizing.” The solutions yielded by production-planning or warfare models had to be expressed as simple, i.e. implementable and computable, decision rules. This requirement forced the applied mathematicians, engineers and economists working on such projects to rethink their “modeling strategies.” Klein thus shows that the “modeling strategies” Carnegie researchers crafted for their clients proved especially fit for use in economics: computational and practical constraints shaped Bellman’s dynamic programming techniques, Simon’s certainty equivalence theorem and Muth’s notion of rational expectations, three pillars of New Classical macrodynamics.

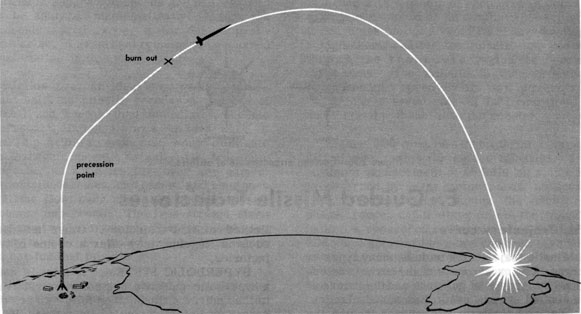

Bellman’s research interests evolved hand in hand with the Cold War rivalry between the US and the USSR, Klein explains. His first assignment was to work on the best allocation of the nine US nuclear bombs in case of a strike. The solution had to be designed as a decision rule telling the bomber how to reoptimize after each move so as to inflict maximum damage on the enemy. As bombs, missiles and other military equipment multiplied in the second half of the 1950s, Bellman switched to inventory control and the determination of optimal military production in the face of uncertain demand. In the wake of the launch of Sputnik, he concentrated on the design of optimal control trajectories for fuses and missiles. Again, his models were constrained by the requirement that the resulting decision rules accommodate uncertainty, be operational and be quickly computable. It was in this context that he fashioned his dynamic programming method, a more tractable alternative to the refined calculus of variations made accessible by the 1962 translation of Pontryagin’s book. Bellman framed his clients’ control problems as multi-stage decision processes, in which the state of the environment at each stage determined which control variables should be used and how (the policy decision). He showed that solving for the optimal value of the objective function was equivalent to first solving for the optimal policy and then deriving the value of the function, a protocol much easier to compute and more useful for his clients.
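
To make the idea of solving for the policy concrete, here is a minimal sketch of backward induction on a toy multi-stage allocation problem. It is purely illustrative and not Bellman's actual model: the function names, the number of stages, the equal stage weights and the square-root payoff (diminishing returns) are all hypothetical assumptions. For every stage and every remaining stock, the table records both the best attainable value and the decision that attains it; the policy table is the decision rule.

```python
import math

def solve_allocation(total_units, stages, reward):
    """Backward induction for spreading a stock of discrete units over several stages.

    value[t][k]  : best total reward from stage t onward with k units left
    policy[t][k] : units to commit at stage t with k units left (the decision rule)
    """
    value = [[0.0] * (total_units + 1) for _ in range(stages + 1)]  # leftover units are worth 0
    policy = [[0] * (total_units + 1) for _ in range(stages)]
    for t in range(stages - 1, -1, -1):  # work backward from the last stage
        for k in range(total_units + 1):
            best_x, best_v = 0, -math.inf
            for x in range(k + 1):  # try every feasible commitment at this stage
                v = reward(t, x) + value[t + 1][k - x]
                if v > best_v:
                    best_x, best_v = x, v
            value[t][k] = best_v
            policy[t][k] = best_x
    return value, policy

def follow_policy(policy, total_units):
    """Apply the decision rule stage by stage, re-reading the state after each move."""
    remaining, plan = total_units, []
    for rule in policy:
        x = rule[remaining]
        plan.append(x)
        remaining -= x
    return plan

# Hypothetical payoff: equal stage weights, diminishing returns to units committed.
weights = [1.0, 1.0, 1.0]
value, policy = solve_allocation(9, len(weights), lambda t, x: weights[t] * math.sqrt(x))
plan = follow_policy(policy, 9)
print(plan, round(value[0][9], 3))  # [3, 3, 3] 5.196
```

Because the table stores a rule for every possible remaining stock, a decision maker who is knocked off the planned path can reoptimize simply by looking up the new state, which is the kind of reoptimization after each move described above.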

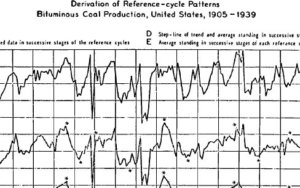

Bellman’s protocol was immediately taken up by the economists and engineers recruited to the newly established Graduate School of Industrial Administration of the Carnegie Institute of Technology. During the fifties, the Air Force, then the Office of Naval Research, asked Herbert Simon, former engineer Charles Holt, Franco Modigliani and William Cooper to devise decision rules aimed at minimizing production and inventory costs at specific plants. Those rules had to be implementable by non-technicians and easily computable, so approximate solutions were preferred to abstract ones. As a result, the Carnegie team worked in the spirit of “application-driven theory,” in Cooper’s words: you start with a problem, you develop a solution, and the theory then comes from generalizing that solution. In particular, they resorted to reverse engineering: knowing from previous work on servomechanisms that Laplace transforms made the computations much easier, they looked for a cost function amenable to such techniques, and found that it had to be quadratic. The quadratic cost function had yet another virtue. Since the models had to include some forecast of (uncertain) future demand, Simon demonstrated that, with a quadratic objective function, uncertain state variables could be replaced by their unconditional expectation. This certainty equivalence theorem spared the modeler the task of handling the whole probability distribution of future variables and performing long and complex computations. Reflecting on his team’s modeling strategy, Simon came up with the idea that, just like scientists, entrepreneurs confronted with limited information and computational abilities were not maximizing, but looking for satisfactory rules of behavior: they exhibited bounded rationality. One of Simon’s PhD students working on the project, John Muth, interpreted their experience in the opposite way, however.
While Simon had concluded that economic models assume too strong a form of rationality, Muth argued that “dynamic economic models do not assume enough rationality.” Entrepreneurs’ expectations, “since they are informed predictions of future events, are essentially the same as the predictions of the relevant economic theory,” he famously explained in 1960.
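
Why the quadratic form mattered can be checked numerically. The sketch below is a one-period simplification, not Simon's multi-period theorem, and the demand scenarios, probabilities and cost weights `H` and `C` are hypothetical. With a quadratic cost, the output that minimizes expected cost over the whole distribution of demand coincides with the output computed as if demand were known and equal to its mean. With an absolute-value cost, the two answers differ, so the shortcut fails.

```python
# Hypothetical demand scenarios (equally likely) for a one-period production decision.
demand = [80.0, 100.0, 100.0, 160.0]
prob = [0.25, 0.25, 0.25, 0.25]
H, C = 1.0, 0.25  # assumed weights on demand mismatch and on production cost

def quadratic_cost(x, d):
    # Penalize the gap between output and demand, plus a convex production cost.
    return H * (x - d) ** 2 + C * x ** 2

def absolute_cost(x, d):
    # A non-quadratic criterion, for contrast.
    return abs(x - d)

def expected(cost, x):
    return sum(p * cost(x, d) for p, d in zip(prob, demand))

grid = [i / 10 for i in range(2001)]  # candidate outputs 0.0, 0.1, ..., 200.0
mean_demand = sum(p * d for p, d in zip(prob, demand))

results = {}
for name, cost in [("quadratic", quadratic_cost), ("absolute", absolute_cost)]:
    # Optimum computed with the whole distribution of demand...
    x_full = min(grid, key=lambda x: expected(cost, x))
    # ...versus treating demand as certain and equal to its mean.
    x_ce = min(grid, key=lambda x: cost(x, mean_demand))
    results[name] = (x_full, x_ce)
    print(name, x_full, x_ce)  # quadratic 88.0 88.0, then absolute 100.0 110.0
```

The brute-force grid search is deliberately naive: it evaluates the full distribution at every candidate output, which is precisely the work that certainty equivalence lets the modeler skip.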

While Pontryagin-type dynamic optimization was immediately carried over into the growth-theoretic modeling developed at Stanford and MIT, Bellman’s dynamic programming, combined with Kalman filtering, rational expectations and the certainty equivalence theorem, was the “modeling strategy” chosen by Carnegie economists. Lucas first used Muth’s framework in a 1966 paper aimed at studying individual investment decisions, then in the 1971 model he built with Prescott of firms facing random shifts in the industry demand curve. Bellman’s protocol enabled them to determine the optimal time path of investment and prices, a technique that subsequently spread through macroeconomics. As Esther-Mirjam Sent emphasized, opposing “freshwater” science to “saltwater” engineering is thus misleading: both modeling styles drew heavily upon modeling strategies initially developed for engineering purposes.
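
Muth’s hypothesis, on which Lucas and Prescott built, can be illustrated with a textbook cobweb market in which producers commit output one period before the price is known. The sketch is an assumption-laden toy: the linear demand and supply parameters, the shock size and the helper names are hypothetical. Under naive expectations producers expect last period’s price; under rational expectations the expected price is the model’s own prediction, found as a fixed point.

```python
import random

# Hypothetical linear market: producers commit output one period ahead.
A, B = 100.0, 2.0  # demand:  q = A - B * p
C, D = 10.0, 1.0   # supply:  q = C + D * p_expected + shock

def clearing_price(p_expected, shock=0.0):
    # Price that equates demand with the supply already committed.
    return (A - C - D * p_expected - shock) / B

def rational_expectation():
    # Fixed point: the expected price equals the model's own prediction,
    # p_e = (A - C - D * p_e) / B when the shock has mean zero.
    return (A - C) / (B + D)

def simulate(expect, shocks, p0):
    """Return (expected price, realized price) pairs under an expectation rule."""
    history, last = [], p0
    for s in shocks:
        pe = expect(last)
        p = clearing_price(pe, s)
        history.append((pe, p))
        last = p
    return history

rng = random.Random(0)
shocks = [rng.gauss(0.0, 4.0) for _ in range(5)]

naive = simulate(lambda last: last, shocks, p0=40.0)  # expect last period's price
rational = simulate(lambda last: rational_expectation(), shocks, p0=40.0)

for label, path in [("naive", naive), ("rational", rational)]:
    errors = [round(p - pe, 2) for pe, p in path]  # forecast errors
    print(label, errors)
```

Under rational expectations the forecast errors reduce to the scaled supply shocks, so nothing in past data could improve on the forecast; naive expectations instead produce errors with a systematic, alternating pattern. This is one way to read Muth’s complaint that dynamic models “do not assume enough rationality.”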

Klein’s catchword “modeling strategy” is central to this story. It conveys what the turn to rational expectations meant for those Carnegie economists immersed in a Cold War engineering zeitgeist. That economic agents were viewed as collections of decision rules reflected modelers’ daily practice. The normative decision rules Carnegie economists developed for their military and private clients eventually became a positive representation of how economic agents behave, so that it made sense to assume that a “communism of models” existed between modelers and agents. The modeling strategies chosen by New Classical economists had originally been designed for practical purposes, and their specific structure therefore framed economists’ research and policy conclusions. The stabilizing quality of agents’ rules of behavior was built into models exhibiting a new kind of rationality and a multistage decision process, while Pontryagin-type protocols led modelers toward alternative notions of equilibrium and, thus, divergent policy prescriptions. All this suggests that the modeling and policy aspects of the macro debates raging in the 1970s and 1980s are ultimately impossible to disentangle.