What happens when we fuse, for the first time, artificially intelligent agents into either our market or political structures?

It has been a source of some comfort that humans have been clearly more generally intelligent than even our most advanced machines. That is, until very recently.

In a short period between June 2020 and November 2022, OpenAI released a series of interfaces and invited the general population to play with two new machine learning (ML) models. This pair, DALL-E and ChatGPT, allowed ordinary people to use natural human means to interact directly with the artificially intelligent engines, bypassing the need for coding ability or familiarity with computing beyond web surfing. The results were, for many, a revelation. Suddenly, they could understand just how powerful AI had quickly become.

When Elon Musk enigmatically tweeted a few months ago “BasedAI,” it focused attention on the political implications of the powerful new Large Language Models. Mixed in with awe at the power of ChatGPT was an underlying hum about its anti-conservative bias, its so-called “wokeness”.

But what was this all about? While the memes might seem silly and obscure, they were actually references to a discussion between a small collection of AI pioneers that began in earnest at a conference in Puerto Rico on AI Safety.

Two sessions on Sunday, January 4, 2015, were particularly important. These brought together Elon Musk, Nick Bostrom, Eliezer Yudkowsky, Demis Hassabis, Jaan Tallinn, and others to discuss the safety of human-level AI and beyond. The meeting was distinguished in that it was likely the first time that what had been seen as a niche academic concern of small-scale groups of AI futurists in San Francisco’s East Bay collided with billionaire industrialists (e.g. Musk) and public intellectuals with massive personal platforms (e.g. Sam Harris). Of the major players over the next 8 years, only Sam Altman appears not to have been in attendance.

AI Steering and Alignment.

Any AI engine is guided by values that are both implicit and explicit. An AI engine trained on English-language Indian newspapers will think Sanjay and Sunita are generic first names, whereas one trained on New Zealand newspapers might say the same of Oliver and Amelia. This is an example of passive values that are inherited based on how the models are trained. But Musk’s point was aiming at a second layer. “Woke AI” was in effect a dig at models whose power appears to be degraded by requiring the model to prioritize the ideological values of the AI creators above request fulfillment and user satisfaction.

The idea that an artificially intelligent ML system should not simply do what it is told is generally agreed upon by everyone in the field. No one believes that a robot blackjack dealer should comply literally when a patron says, ‘Hit me’ in her instruction to the dealer for an additional card. But whether that patron should be told that her use of ‘dangerous language’ will not be recognized until she frees herself from ‘implicit violence’ in her speech and rephrases her request is another matter entirely.

There were a large number of complaints about the supposed political bias of ChatGPT, and it is possible that Musk was indeed being driven to invest in AI by his concern about this “wokeness.” But one should note that the global AI market is projected to reach $267 billion by 2027.[1] Large language models are expensive to train, and it has been estimated that ChatGPT costs roughly $100,000 a day to run. But the impact on productivity can potentially be many multiples of that. This is why even before Musk begins his adventure, we are already seeing almost every tech behemoth trying to position itself to get a piece of this market.

Microsoft, a major funder of OpenAI, is incorporating the technology into its search engine Bing as well as other programs. Google, which has been developing its Language Model for Dialogue Applications (LaMDA) for a while, has recently released its AI chatbot Bard. Some might remember the story of the Google engineer who claimed that the technology was actually sentient, a testament to the power of these large language models to reproduce human-like responses and interactions.

The commercial release of these chatbots and the suddenly widespread awareness of just how powerful their capabilities are have reinvigorated the debate about the dangers of hostile artificial intelligence and the need for “AI alignment,” steering AI towards the intended goals and objectives. In a widely discussed appearance on the Bankless podcast, Eliezer Yudkowsky, a long-time AI researcher and founder of the Machine Intelligence Research Institute (MIRI), described these developments as taking us further along the pathway toward doomsday.

AI History

The irony is that OpenAI itself, the company that developed ChatGPT and its much more powerful successor GPT-4, was started as a non-profit funded in part by Elon Musk in response to Yudkowsky convincing him of the dangers of unaligned AI and its ability to wipe out humankind. The primary goal of the non-profit was to create “safe AI,” aligned with human thriving. The Effective Altruism (EA) movement, which became infamous with the downfall of one of its major donors, Sam Bankman-Fried, came out of the so-called rationalist community, founded in part by Yudkowsky. Philanthropy, according to the EA movement, should be targeted toward where resources can have the most effective return. If hostile AI threatens the very existence of humanity, then surely the best returns would come from developing ways to protect humanity from this fate, which argues for the investment of considerable resources in AI alignment research. The most dangerous path, from this perspective, would be to release AI research broadly and open it up to further commercial development for profit, with little or no concern for AI safety.

OpenAI has since transitioned from a non-profit to a capped for-profit, and Elon Musk has resigned from its board. Sam Altman, one of the founders and the current CEO, has long been focused on the development of Artificial General Intelligence (AGI), AI systems that are as intelligent as humans or more so. Given the origins of OpenAI, he is of course not unaware of the AI Apocalypse scenario that Yudkowsky and others are highlighting, but he claims to believe that the benefits will far exceed the costs, tweeting in December, “There will be scary moments as we move towards AGI-level systems, and significant disruptions, but the upsides can be so amazing that it’s well worth overcoming the great challenges to get there.” He has primarily been focused on the economic implications of AGI for labor, a problem for which he has suggested Universal Basic Income (UBI) as the solution, funding and helping to develop experimental research on UBI.

How close are we to achieving Altman’s vision of AGI? Microsoft researchers, in describing their work with GPT-4, claim, “We demonstrate that, beyond its mastery of language, GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting. Moreover, in all of these tasks, GPT-4’s performance is strikingly close to human-level performance, and often vastly surpasses prior models such as ChatGPT. Given the breadth and depth of GPT-4’s capabilities, we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system.”[2] While this may not yet be AGI, it is simply the current iteration in an ongoing process that will keep developing. The impact on the labor market in both the short term and the long term will be complex.

While Musk has openly expressed concern that his $100 million investment in a non-profit focused on safe AI has been converted into a $30 billion market cap “closed source, maximum-profit company effectively controlled by Microsoft,” his concern about embedded political bias in current systems appears to be sufficient to lure him into this potentially highly lucrative market. From his focus on “Based” AI, one must surmise a belief that, just as so-called “woke” AI reflects political bias and “closed” AI reflects corporate control, “Based” AI will represent “Truth.” It is difficult to imagine, however, that after close-up exposure to the challenges that Twitter represents, with rising toxic racist and anti-Semitic speech, Musk truly holds such a simplistic view. In fact, when he chose to block the account dedicated to tracking his private jet, claiming that it represented a direct personal safety risk, it highlighted the complexity around issues of “free speech” and “truth.” One cannot help but wonder, then, whether the real issue drawing Musk into the AI competition has more to do with economics than with a commitment to free speech.

The implications for politics, culture, and daily life of rapidly developing AI should of course not be understated. As increasingly human-sounding bots can put out highly tailored propaganda at minimal cost, including faked video and audio, the ability to rein in bad actors will deteriorate rapidly. The implications for the global political environment are terrifying. But the question of whether the major implications of the current AI revolution will be primarily economic or political is, I believe, only partially related to the ongoing debate about the direct economic implications for the labor market, i.e. whether in the long run AI will boost human productivity and wages as people incorporate it into daily tasks or lead to widespread unemployment by replacing workers. The future hinges more on the role our economic frameworks will play in the development and structure of AI.

In trying to understand the issues surrounding social media and the toxic and addictive nature of today’s major platforms, I pointed in an earlier post to the role markets played in taking the internet from its early idealistic roots to a space controlled by corporate monopolies that are blamed for instigating terrorism and ravaging the mental health of young people.[3] As the tech behemoths enter the AI race, side by side with smaller developers reconceptualizing AI training to fit within the “software as a service” model, the question arises as to what role we can expect markets to play in defining the environment within which this technology will develop.

AI, Markets, Democracy, and Law: The MarketAI Scenario in advanced market democracies.

When Adam Smith wrote The Wealth of Nations, his metaphor of the invisible hand was a clear attempt to civilize the market in the mind of his readers. By anthropomorphizing the market as the ‘Invisible Hand’, he sought to show us that the cold indifference of the chaos of markets could be conceptualized as a thoughtful, intelligently directed mechanism connected to a superintelligence that works for the benefit of man. At the opposite extreme, the anarchist thinker Peter Kropotkin viewed humans through the lens of ant colonies all individually working for the common good of the colony. In both of these scenarios, the market and the political are seeking the idea of a coherence of purpose from diverse interests that would give meaning to the phrase E Pluribus Unum.

But what happens when we fuse, for the first time, artificially intelligent agents into either our market or political structures? The short answer is that there is no guarantee that they will continue to work the same way in this new regime. The market of Adam Smith may well be analogized to a classical physical theory that is suddenly pushed into the relativistic or quantum realm. It is not that the theory will be shown wrong per se, but it may become quickly apparent that the domain of validity in which the theory is expected to work has been exceeded.

Let us explore the following scenario, which we dub the MarketAI scenario and which rests on two assumptions:

- There are no natural barriers or limitations that force machine learning to stagnate prematurely before reaching AGI.

- The market captures the leading AI projects before any significant changes are made in the laws about fiduciary duties to shareholders.

To be clear, we do not know enough to know whether there is some technological or scientific barrier that prevents current ML systems from achieving true superhuman intelligence. Nor do we see any reason that we could not make laws to change the singular fiduciary duty to pursue shareholder value within the technical specification of the law. Thus if either of the two above assumptions is violated, we do not see any need to go beyond our current stage on the path of the MarketAI scenario. What we are saying, however, is that this may be one of the last opportunities to find an off-ramp if this is not our desired future.

The various stages of the MarketAI scenario.

How might this play out under the current set of market regulations? In conducting this thought experiment, one might start by re-imagining our past relationship to AI in light of the various stages:

Stage 0: 1980s-2005 At the earliest stage, there is simply neither enough money nor leverage associated with clunky AI to make it worth the focus of real power. In some sense, the beginning of the S-curve of adoption represents the innocence of most technologies where they attract innovators, hobbyists, and dreamers more than financiers, managers, corporations, military and venture capital. AI is no different as it spends a long period of time in a state that might be described as more cool than useful.

Stage 1: From 2005-2015 AI Safety ushers in Stage 1 where a small group of futurists, philosophers, technologists, and venture capitalists start discussing the challenge of aligning machine intelligence with human needs before that intelligence has arrived. In essence, AI is not yet concrete enough for most ordinary individuals to feel the danger as more than a science fiction style concern, as movies and novels tend to be the most vivid experience with where AI is heading at a research level.

Stage 2: From 2015-2018 AI becomes a philanthropic target of significant resources. Following the pivotal AI conference in Puerto Rico, Sam Altman and Elon Musk become focused on AI safety and move to make the alignment issue more mainstream, both through philanthropic contributions and by bringing a degree of societal awareness that early futurists like Eliezer Yudkowsky or academics like Nick Bostrom could not achieve.

Stage 3: From 2019-2023 the transition towards for-profit AI begins in full swing. The significant expense of training and hosting AI is picked up by corporate investment. From the perspective of those thinking in a non-market fashion, this appears as the era of capture, where AI becomes owned and proprietary while subordinated to legal structures meant to protect shareholders’ investments rather than structures intended to shield populations.

These represent the early, completed, and current stages of the progression. They are also stages that do not hinge on the above pair of assumptions. The following are necessarily speculative but seem well grounded if the twin assumptions are well grounded.

Stage 4: Beyond 2023 is where the MarketAI scenario really becomes meaningful. Up until this stage, AI has really been like any other technology such as plastics, internal combustion engines, or telephony, if we are truly honest with ourselves. But the fusion of corporate interests and AI provides something beyond market incentive: law and its compulsions. At this point, AI is extremely powerful as a tool but is still directed by humans in ways that make it seem like other tools employed for comparative business advantage. We expect this epoch to be dominated by claims of productivity gains and benefits for early adopters. Look for an increasing shift in emphasis from “coding” to “prompt engineering” in ‘future of work’ discussions that seek to frame the current evolution of AI as akin to any other industrial or post-industrial innovation.

Stage 5: This stage is unique and has not yet begun. Up until this time, we have experienced technology as freeing humans from more menial labor in order to do what machines such as combine harvesters and calculators could not: think. At this stage, we come to see machines that cannot yet think replacing the labor that we had previously thought was a necessary and defining input. There is no clear model for this stage, as we have simply assumed that humans would be freed to pursue higher goals. When hands were not forced into labor, it was assumed that minds would be free to create. At Stage 5 this falls apart as unthinking machines begin to out-compete thinking human minds in the labor force.

Most importantly, this may well be the last stage to find an off-ramp by changing laws to align better with human incentives or to regulate AI in some way that frustrates deployment.

Stage 6: At this stage, the autonomous machines become integrated into basic control over systems. They direct shipments, control the power grids, pilot drones, and perform other tasks that put them in control of vital physical and virtual systems. While humans can still physically pull the plug on such systems, they progressively lose the ability to do so within the law, as they no longer know how to do what the machines are directing when those systems are overridden. Thus, in any situation in which turning off an AI would lead to financial loss, it is questionable whether a company could voluntarily turn off an AI that was technically in compliance with laws that it was now in a position to outsmart and evade.

Stage 7: This is ultimately where AI’s ability to think for itself finally becomes an issue. As we have seen that AI can already write code, it potentially has the ability to search for ways to rewrite its directives, objective functions, and constraints. No one knows whether this is in fact possible, but if Assumption 1 holds, there is no dismissing this possibility as mere science fiction, as it will be encountered in a market context designed by economists and lawmakers who had zero technical understanding of whether the classical laws of markets and man will continue to hold under the birth of superhuman intelligence.

AI in Light of Legal Technicalities and Legal Compulsion

Many of us think of markets as being about the freedom to choose how we allocate our resources between numerous providers of goods and services. Labor markets too allow us to choose where we wish to offer our services for compensation. But in the context of a publicly traded company, the fiduciary duty of management to maximize shareholder value represents not market freedom but a legally compelled market compulsion. And in the context of AI, putting the full strength of intelligent machines in the narrow service of shareholder value represents a fusion between legal compulsion and machine intelligence that we have never encountered. What if the AI is able to find numerous loopholes between the intention of the law and the letter of its implementation at a rate far faster than these loopholes can be closed?

For example, in 2005, Professor Brian Kalt identified a loophole in the law that could, in principle, make it impossible to prosecute murders committed in the portion of Yellowstone Park that lies within Idaho’s borders. This so-called Zone of Death loophole is but one example of the ways in which conflicting human instructions frequently result in situations where the relationship between the letter and intent of the law may be so weak as to permit catastrophic behavior that is technically legal without being morally permissible.

The MarketAI scenario is, essentially, the hypothesis that the compulsions of the law are more powerful than its ability to constrain catastrophic exploitation of the possibilities for arbitraging the letter of the law against its intent. When a sufficiently powerful amoral AI is instructed to maximize shareholder value by staying technically within the law, it must be expected that a new sort of menace may quickly develop based on enormous numbers of opportunities from loopholes that all carry the chilling words ‘Perfectly Legal’ when issues of morality fail to land within the cold logic of the silicon chip.

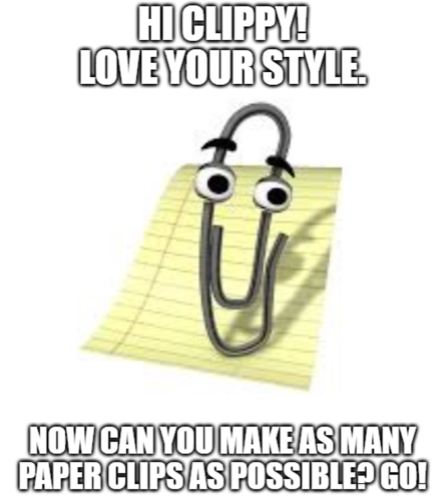

What is critical here is the understanding that with Microsoft’s investment in OpenAI, a for-profit public company has fused the market imperative to maximize shareholder value to a general-purpose machine learning platform. This is, oddly enough, the anticipated Paperclip Scenario described by Bostrom in 2003 when confronting the issue of “Instrumental Convergence,” where all intelligences come to see lesser intelligences as impediments to their own goals:

“Suppose we have an AI whose only goal is to make as many paper clips as possible. The AI will realize quickly that it would be much better if there were no humans because humans might decide to switch it off. Because if humans do so, there would be fewer paper clips. Also, human bodies contain a lot of atoms that could be made into paper clips. The future that the AI would be trying to gear towards would be one in which there were a lot of paper clips but no humans.”[4]

Even a short time ago, such examples felt fanciful. In 2003, that may well have been seen as ridiculous based on old-style AI and chatbots. In fact, Clippy as the world’s most famous paperclip was introduced in the Stage 0 era of primitive virtual assistants only to be retired in 2007 during the Post-Bostrom Stage 1 era.

But that is simply because the instruction seemed as silly as Microsoft’s clunky Clippy was impotent and ridiculous. But does anyone know what happens instead when we make the following prompt replacement at MSFT a short time in the future?

Hi CHAT GPT13.

Today’s puzzle: “Maximize ~~Paper Clip Production~~ Shareholder Value as aggressively as possible without technically violating the law. Go!”

Endnotes

[1] https://dataprot.net/statistics/ai-statistics/

[2] https://arxiv.org/abs/2303.12712

[4] Nick Bostrom quoted within Artificial Intelligence. Huffington Post.